I’ve been thinking a bit about the efficacy of QE, the potential benefits of the Bank of England’s Funding for Lending Scheme (FLS) [BoE, HM Treasury] and the new paper Michael Woodford presented at Jackson Hole (it’s a classic Woodford paper, by the way, even though it is almost entirely equation-free: a little difficult to wrap your head around, but ultimately very, very insightful). Woodford’s conclusion opens with an excellent statement of the problem:

> Central bankers confronting the problem of the interest-rate lower bound have tended to be especially attracted to proposals that offer the prospect of additional monetary stimulus while (i) not requiring the central bank to commit itself with regard to future policy decisions, and (ii) purporting to alter general financial conditions in a way that should affect all parts of the economy relatively uniformly, so that the central bank can avoid involving itself in decisions about the allocation of credit.

The interest-rate lower bound here is not necessarily zero, but rather whatever rate is paid on excess reserves, which may indeed be zero, but need not be. In the US, interest on reserves for depository institutions has been paid since Oct 2008 and has been 0.25% since Dec 2008; in the UK it has been Bank Rate, currently 0.5%, since Mar 2009. In principle, one might push the interest rate paid on reserves into negative territory, but such an action would come at the cost of destroying a subset of the money market and with a very real risk that economic agents (banks or, worse, businesses and households) would instead choose to hold their money in the form of physical currency.

Woodford advocates a strong form of forward guidance — that is, the abandonment of restriction (i) — as the optimal policy at the present time, on the basis that all monetary policy is, fundamentally, about expectations of the future. In particular, he uses the paper to make an argument for nominal GDP level targeting.

This is vitally important stuff, but in this post I want to talk about quantitative easing, in the general sense of the phrase, or what Woodford far more accurately refers to as “balance sheet policies.”

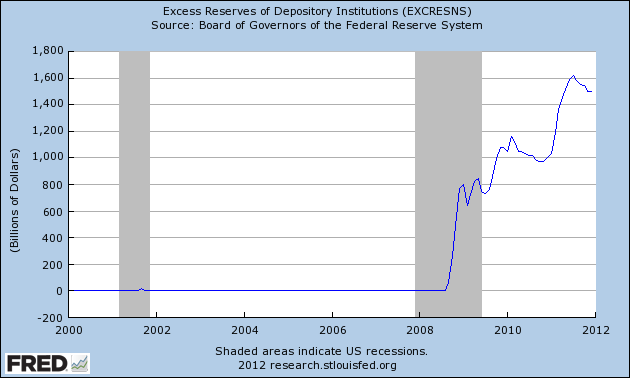

First up is the purchase of short-dated safe assets, paid for with the creation of new reserves. For the financial sector, this means giving up a safe, liquid asset with a steady revenue stream in return for money. In normal times, the financial sector might then seek to increase its lending, providing a multiplier effect. But with interest rates on short-dated safe assets at the same level as the interest paid on reserves, the banks’ financial position does not change with the purchase, so their incentive to lend cannot increase. In this case, the short-dated safe asset has become a perfect substitute for money and, absent any forward guidance, such a policy can have no effect on the real economy. Krugman (1998) and Eggertsson and Woodford (2003) provide two-period and infinite-horizon treatments, respectively. Forward guidance in this setting might be anything from the private sector observing the purchases and inferring a more accommodative policy stance in the future (with the central bank doing nothing to disabuse them of that belief) to an outright statement from the central bank that the increase in reserves will be permanent.
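To make the lower-bound argument concrete, here is a minimal Python sketch of one bank’s interest income before and after the central bank buys bills from it with newly created reserves. The function names and all rates are hypothetical, chosen only for illustration; the point is that the swap changes the bank’s income only when the bill yield differs from the rate paid on reserves.

```python
def annual_income(bills: float, reserves: float, bill_yield: float, ior: float) -> float:
    """One year's interest income from holdings of bills and reserves."""
    return bills * bill_yield + reserves * ior


def income_change_from_purchase(amount: float, bill_yield: float, ior: float) -> float:
    """Change in a bank's income when the central bank buys `amount` of its
    bills and pays with newly created reserves (hypothetical illustration)."""
    before = annual_income(amount, 0.0, bill_yield, ior)
    after = annual_income(0.0, amount, bill_yield, ior)
    return after - before


# Normal times (assumed rates): bills out-yield reserves, so the swap costs the
# bank income and gives it a reason to put the reserves to work elsewhere.
normal = income_change_from_purchase(100.0, bill_yield=0.03, ior=0.005)
# At the lower bound (assumed rates): bills yield exactly what reserves pay.
at_bound = income_change_from_purchase(100.0, bill_yield=0.005, ior=0.005)
print(normal)    # negative
print(at_bound)  # 0.0
```

At the lower bound the bank is exactly as well off holding reserves as holding bills, which is what "perfect substitute" means here.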

Next up is the idea of purchasing long-dated safe assets, or even long-dated risky assets. Woodford stresses that this can be decomposed into two distinct parts: an initial expansion of the central bank’s balance sheet via the purchase of short-dated safe assets, followed by an adjustment of the composition of the balance sheet, selling short-dated safe assets and buying long-dated assets. Since the first step is thought to be ineffective (by non-Monetarists, at least), any traction must come from the second step.
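The decomposition can be checked with a toy balance sheet. In this minimal Python sketch (the class and function names are hypothetical), buying long-dated assets with new reserves in one go leaves the central bank’s balance sheet in exactly the same state as step 1 (expand with short-dated assets) followed by step 2 (swap short-dated for long-dated), which is why any extra effect has to come from step 2.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CentralBankBalanceSheet:
    short_assets: float  # short-dated safe assets held (asset side)
    long_assets: float   # long-dated assets held (asset side)
    reserves: float      # reserves owed to banks (liability side)


def expand(bs: CentralBankBalanceSheet, amount: float) -> CentralBankBalanceSheet:
    """Step 1: buy short-dated safe assets with newly created reserves."""
    return replace(bs, short_assets=bs.short_assets + amount,
                   reserves=bs.reserves + amount)


def recompose(bs: CentralBankBalanceSheet, amount: float) -> CentralBankBalanceSheet:
    """Step 2: sell short-dated safe assets and buy long-dated ones; size unchanged."""
    return replace(bs, short_assets=bs.short_assets - amount,
                   long_assets=bs.long_assets + amount)


def buy_long_with_reserves(bs: CentralBankBalanceSheet, amount: float) -> CentralBankBalanceSheet:
    """A long-dated purchase paid for with newly created reserves, done in one go."""
    return replace(bs, long_assets=bs.long_assets + amount,
                   reserves=bs.reserves + amount)


# Made-up starting position, in arbitrary units
start = CentralBankBalanceSheet(short_assets=50.0, long_assets=20.0, reserves=70.0)
two_steps = recompose(expand(start, 30.0), 30.0)
one_step = buy_long_with_reserves(start, 30.0)
print(two_steps == one_step)  # True
```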

But because the second step represents either an adjustment in the relative supply of short- and long-dated government debt (if one limits oneself to safe assets) or an allocation of capital directly to the real economy (if one purchases risky assets), it is arguably fiscal rather than monetary policy and might be better left to the Treasury. Putting that concern to one side, I want to consider why it might, or might not, work.

The standard argument in favour is that of portfolio rebalancing: now holding extra cash and facing low yields on long-dated safe assets, a financial actor seeking to equate their risk-adjusted returns across assets should choose to invest at least some of the extra cash in risky assets (i.e. lending to the real economy). Woodford emphasises that this story implicitly requires heterogeneity across market participants:

> But it is important to note that such “portfolio-balance effects” do not exist in a modern, general-equilibrium theory of asset prices — in which assets are assumed to be valued for their state-contingent payoffs in different states of the world, and investors are assumed to correctly anticipate the consequences of their portfolio choices for their wealth in different future states — at least to the extent that financial markets are modeled as frictionless. It is clearly inconsistent with a representative-household asset pricing theory (even though the argument sketched above, and many classic expositions of portfolio-balance theory, make no reference to any heterogeneity on the part of private investors). In the representative-household theory, the market price of any asset should be determined by the present value of the random returns to which it is a claim, where the present value is calculated using an asset pricing kernel (stochastic discount factor) derived from the representative household’s marginal utility of income in different future states of the world. Insofar as a mere re-shuffling of assets between the central bank and the private sector should not change the real quantity of resources available for consumption in each state of the world, the representative household’s marginal utility of income in different states of the world should not change. Hence the pricing kernel should not change, and the market price of one unit of a given asset should not change, either, assuming that the risky returns to which the asset represents a claim have not changed.
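In symbols, the standard consumption-based pricing equation behind this argument can be written as below. The notation is assumed here rather than taken from Woodford’s paper: $x^j_{t+1}$ is the random payoff of asset $j$, $c$ is the representative household’s consumption in each state, $u'$ its marginal utility and $\beta$ its discount factor.

$$
p^j_t = \mathbb{E}_t\!\left[ m_{t+1}\, x^j_{t+1} \right],
\qquad
m_{t+1} = \beta\,\frac{u'(c_{t+1})}{u'(c_t)}
$$

A pure re-shuffling of assets between the central bank and the private sector leaves $c_{t+1}$ unchanged in every state, so $m_{t+1}$ is unchanged; with the payoffs $x^j_{t+1}$ also unchanged, every price $p^j_t$ stays where it was.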

He goes on to stress that if the central bank were to take some risk off the private sector, the risk would still remain: in the event of a loss, the reduction in central bank profits remitted to the Treasury would require a subsequent increase in taxes. Consequently, a representative household would bear the loss regardless of whether the asset was formally held by the household itself or by the central bank. Crucially, too …

> The irrelevance result is easiest to derive in the context of a representative-household model, but in fact it does not depend on the existence of a representative household, nor upon the existence of a complete set of financial markets. All that one needs for the argument are the assumptions that (i) the assets in question are valued only for their pecuniary returns [John here: i.e. their flow of revenue and their expected future resale value] — they may not be perfect substitutes from the standpoint of investors, owing to different risk characteristics, but not for any other reason — and that (ii) all investors can purchase arbitrary quantities of the same assets at the same (market) prices, with no binding constraints on the positions that any investor can take, other than her overall budget constraint. Under these assumptions, the irrelevance of central-bank open-market operations is essentially a Modigliani-Miller result.
>
> […]
>
> Summing over all households, the private sector chooses trades that in aggregate precisely cancel the central bank’s trade. The result obtains even if different households have very different attitudes toward risk, different time profiles of income, different types of non-tradeable income risk that they need to hedge, and so on, and regardless of how large or small the set of marketed securities may be. One can easily introduce heterogeneity of the kind that is often invoked as an explanation of time-varying risk premia without this implying that any “portfolio-balance” effects of central-bank transactions should exist.
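The cancellation can be illustrated with a minimal Python sketch. It assumes (hypothetically) that each household bears a fixed share of the central bank’s gains and losses through taxes, with shares summing to one, and that it cares only about its effective exposure to the long-dated asset: direct holdings plus its share of the central bank’s holding, in line with assumption (i). With no position limits, as in assumption (ii), each household sells its share of whatever the central bank buys, however different the households are.

```python
from dataclasses import dataclass


@dataclass
class Household:
    tax_share: float  # share of central-bank gains and losses borne via taxes (assumed)
    target: float     # desired effective exposure to the long-dated asset (assumed)


def direct_holding(h: Household, cb_holding: float) -> float:
    """Direct holding that delivers the household's target effective exposure,
    given its indirect exposure through the central bank's portfolio."""
    return h.target - h.tax_share * cb_holding


def private_trades(households: list[Household], cb_before: float, cb_after: float) -> list[float]:
    """Each household's trade when the central bank's holding of the
    long-dated asset moves from cb_before to cb_after."""
    return [direct_holding(h, cb_after) - direct_holding(h, cb_before) for h in households]


# Heterogeneous households: different tax shares (summing to one) and targets
households = [
    Household(tax_share=0.5, target=40.0),
    Household(tax_share=0.3, target=10.0),
    Household(tax_share=0.2, target=25.0),
]
trades = private_trades(households, cb_before=0.0, cb_after=30.0)
print(trades)       # each household sells its tax share of the purchase
print(sum(trades))  # aggregate private sales offset the central bank's purchase
```

If a household could not take the position it wanted (say, no negative direct holdings while its target sat below its share of the central bank’s holding), it could not complete its offsetting sale, and the aggregate private trade would fall short of the purchase. That is assumption (ii) failing.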

Of the two requirements for this irrelevance result, the second is clearly not true in practice, so large-scale asset purchases should, in principle, work even in the absence of any forward guidance, although the size of the effect would remain in doubt.

On the first, Woodford does acknowledge work by Krishnamurthy and Vissing-Jorgensen (2012) showing that US government debt possesses non-pecuniary qualities that are valued by the financial sector. In particular, safe government debt is often required as collateral in repo transactions, and this requirement should give such assets value above that implied by their pure pecuniary returns. However, as Krishnamurthy and Vissing-Jorgensen point out in a discussion note, to the extent that this channel is important, it implies that central bank purchases of long-dated safe assets can even be welfare-reducing.

To see why this is so, I think it best to divide the universe of financial intermediaries into two groups: regular banks and pure investment shops. Pure investment shops have, collectively, particularly stable funding (think pension funds), although the funds might swoosh around between individual investment shops. Regular banks have some stable funding (from retail deposits), but also rely on wholesale funding.

Up until the financial crisis of 2008, regular banks’ wholesale funding was done on an unsecured basis. There was no collateral required. There was very little asset encumbrance. But since the crisis (and, indeed, arguably because of it), regular banks have had essentially no access to unsecured funding. Instead, banks have been forced to rely almost entirely on secured borrowing (e.g. through covered bonds at the long end or repos at the short end) for their wholesale funding. In addition, new regulations have been (or are being) put in place that increase their need to hold safe assets (i.e. government debt) even if unsecured borrowing is available.

QE has therefore acted through two broad channels. In the first, portfolio rebalancing may still have worked through the pure investment shops. Having sold their government bonds and now holding cash, they reinvested the money, but since yields on government bonds were now lower relative to other asset classes, they put a larger fraction of it into equity and corporate bond markets. To the extent that such investment shops are unable to perfectly offset the central bank’s trade, or to fully recognise that they must bear any potential losses on the risk the central bank takes on, large non-financial companies (NFCs) with access to stock and bond markets should have seen a reduction in the price of credit and, in principle, should have been more willing to undertake investment.

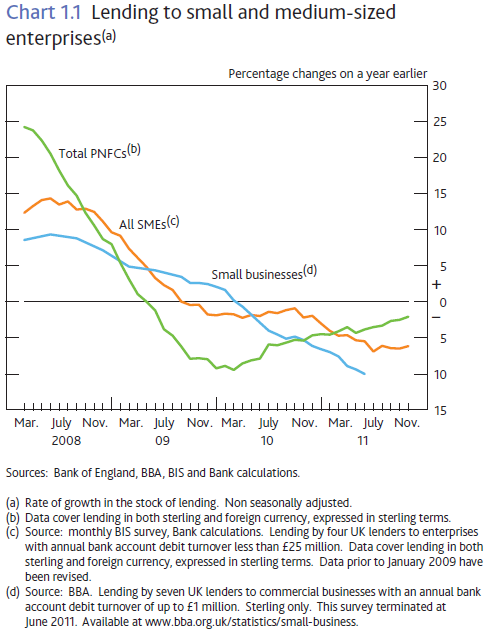

On the other hand, QE has also reduced the supply of eligible collateral at precisely the time when demand for it among regular banks has shot up. The banks have then faced an awful choice: either pay a much higher price to get the required collateral (buying it off the pure investment shops), or deleverage so that they no longer need the funding. As a result, their funding costs will have gone up as a direct result of QE, and if they have any pricing power at all (and they do), the interest rates available to households and small-to-medium sized enterprises (SMEs) will be higher than they would otherwise have been. Whichever option banks choose (and most likely they would choose a combination of the two), the result is a negative supply (of credit) shock to the real economy.

If this second broad channel (through regular banks) were to outweigh the first (through pure investment shops), then QE focused on the purchase of long-dated safe assets would, in aggregate, have a negative effect on the economy. I believe it is this very possibility that has given both the Federal Reserve and the Bank of England pause in their consideration of additional asset purchases.

Of course, if the central bank were not to buy long-dated safe assets but were instead to purchase long-dated risky assets (bundles of corporate bonds, MBS, etc.), the supply of safe assets needed for collateral purposes would not be artificially reduced and, to the extent that portfolio rebalancing helps at all, its full effect would be obtained. However, such a strategy would go against the principle that central banks ought to stay out of decisions about the allocation of credit.

All of which, I suspect, is why the Bank of England has decided to go for its Funding for Lending Scheme. At its heart, the FLS is a collateral swap. The BoE gives banks gilts and the banks give the BoE bundles of their mortgages and SME loans, plus interest. The banks can then use the gilts to obtain funding on the wholesale market, while the interest that banks pay the BoE is a decreasing function of how much additional lending the banks make to the real economy. The mortgages and SME loans that the banks give the BoE will have a haircut applied for safety. It’ll be pretty tricky to get just right, but in principle it should be able to offset any increase in funding costs that QE may have imposed.
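The two levers described above, a haircut on the pledged loans and a charge that falls as net lending rises, can be sketched in a few lines of Python. Every name and parameter here is an assumption for illustration, not the Bank of England’s published terms; in particular, the kinked shape (flat once net lending stops falling, rising linearly up to a cap as it falls) is just one simple choice.

```python
# Hypothetical FLS-style terms: every parameter is an assumption for illustration.
BASE_FEE = 0.0025  # assumed annual fee if net lending does not fall
FEE_SLOPE = 0.25   # assumed extra fee per unit fall in net lending (0.25pp per 1%)
MAX_FEE = 0.015    # assumed cap on the annual fee


def annual_fee(net_lending_growth: float) -> float:
    """Annual fee rate: flat when net lending holds steady or grows, rising
    linearly (up to a cap) as net lending falls. Growth is a fraction, so
    -0.02 means a 2% fall."""
    if net_lending_growth >= 0.0:
        return BASE_FEE
    return min(BASE_FEE + FEE_SLOPE * -net_lending_growth, MAX_FEE)


def gilts_received(loan_pool_value: float, haircut: float) -> float:
    """Gilts lent against a pool of mortgages and SME loans, after a haircut."""
    if not 0.0 <= haircut < 1.0:
        raise ValueError("haircut must be in [0, 1)")
    return loan_pool_value * (1.0 - haircut)


# Example: a bank pledges a loan pool worth 1,000 with an assumed 20% haircut
borrowed = gilts_received(1_000.0, haircut=0.20)
for growth in (0.02, 0.0, -0.02, -0.10):
    fee = annual_fee(growth)
    print(f"net lending {growth:+.0%}: fee {fee:.2%}, annual cost {fee * borrowed:.2f}")
```

Tying the charge to net rather than gross lending means, in this sketch at least, that a bank cannot lower its fee simply by originating new loans while running down its existing book.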

A clear majority of credit creation in Britain takes place via regular banks, so this has the potential to have quite a dramatic effect. We’ll just have to wait and see …