I have some questions about the Baltic Dry Index (a rough guide to the price of moving dry goods by sea – more detail available here):

Research Economist at the Bank of England

Something very interesting happened to the share price of Volkswagen this week. The FT has the story:

Volkswagen’s shares more than doubled on Monday after Porsche moved to cement its control of Europe’s biggest carmaker and hedge funds, rushing to cover short positions, were forced to buy stock from a shrinking pool of shares in free float.

VW shares rose 147 per cent after Porsche unexpectedly disclosed that through the use of derivatives it had increased its stake in VW from 35 to 74.1 per cent.

…

[T]he sudden disclosure meant there was a free float of only 5.8 per cent – the state of Lower Saxony owns 20.1 per cent – sparking panic among hedge funds. Many had bet on VW’s share price falling and the rise on Monday led to estimated losses among them of €10bn-€15bn ($12.5bn-$18.8bn).

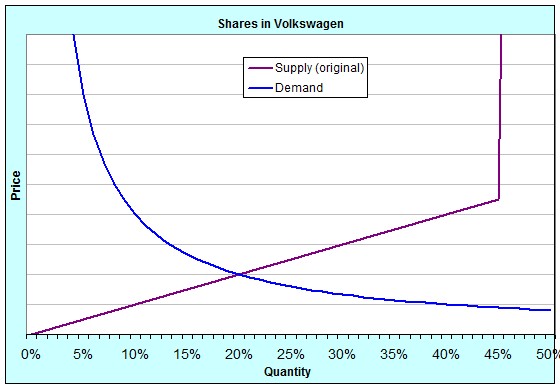

For my students in EC102, this is interesting because of the shifts in Supply and Demand and the elasticity of those curves. The buying and selling of shares in companies is a market just like any other. Here’s an idea of what the Supply and Demand curves for shares in Volkswagen were originally:

The quantity is measured as a percentage because you can only ever buy up to 100% of a company. Notice that the supply curve suddenly rockets upwards at around 45%. That’s because originally, 55% of the shares of Volkswagen weren’t available for sale. 20% was owned by the state of Lower Saxony and 35% by Porsche, and they weren’t willing to sell at any price [In reality, if you were to offer them enough money, they might have been willing to sell some of their shares, but the point is that it would have had to have been a lot]. We say that for quantities above 45%, the supply of shares was highly, even perfectly, inelastic.

Notice, too, that the demand curve is also extremely inelastic at quite low quantities. That is because a lot of hedge funds had shorted the VW stock. Shorting (sometimes called “short selling” or “going short”) is when the investor borrows shares they don’t own in order to sell them at today’s price. When it comes time to return them, they will buy them on the open market and give them back. If the price falls over that time, they make money, pocketing the difference between the price they sold at originally and the price they bought at eventually. A lot of hedge funds were in that in-between time. They had borrowed and sold the shares, and were then hoping that the price would fall. The demand at very low quantities was inelastic because they had to buy shares to pay back whoever they’d borrowed them from, no matter which way the price moved or how far.
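The arithmetic of a short sale is simple enough to sketch in a few lines. The prices below are hypothetical, chosen only for illustration, and the function ignores borrowing fees, margin calls and dividends.

```python
def short_sale_profit(sell_price, buyback_price, shares=1):
    """Profit on a short position: sell borrowed shares now, buy them back later.

    Ignores borrowing fees, margin calls and dividends (a simplification).
    """
    return (sell_price - buyback_price) * shares


# Hypothetical prices in euros, chosen only for illustration.
SOLD_AT = 200.0
profit_if_price_falls = short_sale_profit(SOLD_AT, 150.0)
loss_if_price_spikes = short_sale_profit(SOLD_AT, 900.0)

print(profit_if_price_falls)
print(loss_if_price_spikes)
```

The gain is capped at the original sale price (the share can only fall to zero), but the loss has no ceiling, which is why a short seller who must return the shares will buy at almost any price.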

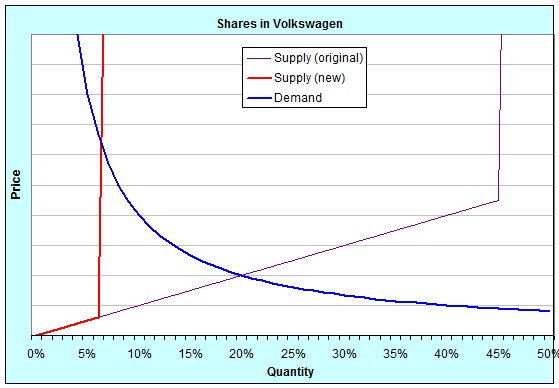

On Monday, Porsche surprised everybody by announcing that they had, through the use of derivatives like warrants and call options, increased their not-for-sale stake from 35% to a little over 74%. This meant that instead of 45% of the shares being available for sale on the open market, only 6% were. It was a shift in the supply curve, like this:

Because at such low quantities both supply and demand were very inelastic, the price jumped enormously. Last week, the price finished on Friday at roughly €211 per share. By the close of trading on Monday, it had reached €520 per share. At the close of trading on Tuesday, it was €945 per share. In fact, during the day on Tuesday it at one point reached €1005 per share, temporarily making it the largest company in the world by market capitalisation!
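For anyone who wants to check the numbers, here is a rough sketch using only the stakes reported by the FT and the approximate closing prices quoted in this post. The function and variable names are just illustrative.

```python
# Stakes reported in the FT piece (per cent of VW shares).
LOWER_SAXONY = 20.1
PORSCHE_BEFORE = 35.0
PORSCHE_AFTER = 74.1


def free_float(porsche_stake, state_stake=LOWER_SAXONY):
    """Per cent of shares not locked up by the two big holders."""
    return 100.0 - porsche_stake - state_stake


def pct_change(old, new):
    """Percentage change from old to new."""
    return 100.0 * (new - old) / old


# Approximate closing prices quoted in the post (euros per share).
closes = {"Friday": 211.0, "Monday": 520.0, "Tuesday": 945.0}

float_before = free_float(PORSCHE_BEFORE)
float_after = free_float(PORSCHE_AFTER)
monday_move = pct_change(closes["Friday"], closes["Monday"])
tuesday_move = pct_change(closes["Monday"], closes["Tuesday"])

print(f"Free float: {float_before:.1f}% -> {float_after:.1f}%")
print(f"Monday: {monday_move:+.1f}%, Tuesday: {tuesday_move:+.1f}%")
```

With these rounded prices the Monday move comes out a shade under the 147 per cent the FT reports, which is what you would expect from using approximate closes.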

Who said that first-year economics classes aren’t fun?

Somebody much smarter than I am was kind enough to read my little post on Endogenous Growth Theory. At lunch today, they drew attention to this item that I mentioned:

I’m not aware of anything that tries to model the emergence of ground-breaking discoveries that change the way that the economy works (flight, computers) rather than simply new types of product (iPhone) or improved versions of existing products (iPhone 3G). In essence, it seems important to me that a model of growth include the concept of infrastructure.

The question was raised:

Could it be that times of significant social change have a tendency to coincide with the introduction (i.e. either the invention or the adoption) of new forms of infrastructure? [*] A new type of mobile phone hardly changes the world, but the wide-spread adoption of mobile telephony in a country certainly might change the social dynamic in that country.

It’s something to ponder …

[*] My intelligent friend is not an economist and would probably prefer to think of this as a groundbreaking discovery rather than just the development of a new type of infrastructure.

Following on from yesterday, I thought I’d give a one-paragraph summary of how economics tends to think about long-term, or steady-state, growth. I say long-term because the Solow growth model does a remarkable job of explaining medium-term growth through the accumulation of factor inputs like capital. Just ask Alwyn Young.

In the long run, economic growth is about innovation. Think of ideas as intermediate goods. All intermediate goods get combined to produce the final good. Innovation can be the invention of a new intermediate good or the improvement in the quality of an existing one. Profits to the innovator come from a monopoly in producing their intermediate good. The monopoly might be permanent, for a fixed and known period or for a stochastic period of time. Intellectual property laws are assumed to be costless and perfect in their enforcement. The cost of innovation is a function of the number of existing intermediate goods (i.e. the number of existing ideas). Dynamic equilibrium comes when the expected present discounted value of holding the monopoly equals the cost of innovation: if the E[PDV] is higher than the cost of innovation, money flows into innovation and vice versa. Continual steady-state growth ensues.
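The equilibrium condition in that paragraph can be put in symbols. What follows is only a sketch in assumed notation, not any particular paper's model: $\pi$ is a constant flow of monopoly profit, $r$ the interest rate, $N$ the number of existing intermediate goods, $\eta(N)$ the cost of one more innovation and $V$ the expected present discounted value of holding the monopoly. The three kinds of monopoly give

$$
V_{\text{permanent}} = \int_0^{\infty} \pi e^{-rt}\,dt = \frac{\pi}{r}, \qquad
V_{\text{fixed}} = \int_0^{T} \pi e^{-rt}\,dt = \frac{\pi}{r}\left(1 - e^{-rT}\right), \qquad
V_{\text{stochastic}} = \int_0^{\infty} \pi e^{-(r+\lambda)t}\,dt = \frac{\pi}{r+\lambda},
$$

where $T$ is the known length of protection and $\lambda$ is an assumed constant hazard rate of losing the monopoly. Free entry into innovation then pins down the equilibrium:

$$
V = \eta(N).
$$

If $V > \eta(N)$, money flows into innovation; if $V < \eta(N)$, it flows out.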

It’s by no means a perfect story. Here are four of my current favourite shortcomings:

New Scientist has a feature this week blaming the unsustainable destruction of the environment on an obsession with economic growth and calling for a move to a growth-free world. The answers to the questions raised by the collection of articles (all essentially repeating each other) are straightforward and widely recognised:

You don’t think all this is possible? Of course it’s possible. Here is an article from Der Spiegel from April 2008 talking about solar power and the Sahara desert. Here is a map from that same article:

The caption reads: “The left square, labelled “world,” is around the size of Austria. If that area were covered in solar thermal power plants, it could produce enough electricity to meet world demand. The area in the center would be required to meet European demand. The one on the right corresponds to Germany’s energy demand.”

If the cost of coal- and gas-fired electricity production were high enough, this would happen so fast it would make an historian’s head spin.

Arnold Kling, speaking of the credit crisis and the bailout plans in America, writes:

What I call the “suits vs. geeks divide” is the discrepancy between knowledge and power. Knowledge today is increasingly dispersed. Power was already too concentrated in the private sector, with CEO’s not understanding their own businesses.

But the knowledge-power discrepancy in the private sector is nothing compared to what exists in the public sector. What do Congressmen understand about the budgets and laws that they are voting on? What do the regulators understand about the consequences of their rulings?

We got into this crisis because power was overly concentrated relative to knowledge. What has been going on for the past several months is more consolidation of power. This is bound to make things worse. Just as Nixon’s bureaucrats did not have the knowledge to go along with the power they took when they instituted wage and price controls, the Fed and the Treasury cannot possibly have knowledge that is proportional to the power they currently exercise in financial markets.

I often disagree with Arnold’s views, but I found myself nodding to this – it’s a fair concern. I’ve wondered before about democracy versus hierarchy and optimal power structures. I would note, however, that Arnold’s ideal of the distribution of power in proportion to knowledge seems both unlikely and, quite possibly, undesirable. If the aggregation of output is highly non-linear thanks to overlapping externalities, then a hierarchy of power may be desirable, provided at least that the structure still allows the (partial) aggregation of information.

So Barack Obama is easily outstripping John McCain both in fundraising and, therefore, in advertising. I’m hardly unique in supporting the source of Obama’s money – a multitude of small donations. It certainly has a more democratic flavour than exclusive fund-raising dinners at $20,000 per plate.

But if we want to look for a cloud behind all that silver lining, here it is: If Barack Obama wins the 2008 US presidential election, Republicans will be in a position to believe (and argue) that he won primarily because of his superior fundraising and not the superiority of his ideas. Even worse, they may be right, thanks to the presence of repetition-induced persuasion bias.

Peter DeMarzo, Dimitri Vayanos and Jeffrey Zwiebel had a paper published in the August 2003 edition of the Quarterly Journal of Economics titled “Persuasion Bias, Social Influence, and Unidimensional Opinions”. They describe persuasion bias like this:

[C]onsider an individual who reads an article in a newspaper with a well-known political slant. Under full rationality the individual should anticipate that the arguments presented in the article will reflect the newspaper’s general political views. Moreover, the individual should have a prior assessment about how strong these arguments are likely to be. Upon reading the article, the individual should update his political beliefs in line with this assessment. In particular, the individual should be swayed toward the newspaper’s views if the arguments presented in the article are stronger than expected, and away from them if the arguments are weaker than expected. On average, however, reading the article should have no effect on the individual’s beliefs.

[This] seems in contrast with casual observation. It seems, in particular, that newspapers do sway readers toward their views, even when these views are publicly known. A natural explanation of this phenomenon, that we pursue in this paper, is that individuals fail to adjust properly for repetitions of information. In the example above, repetition occurs because the article reflects the newspaper’s general political views, expressed also in previous articles. An individual who fails to adjust for this repetition (by not discounting appropriately the arguments presented in the article), would be predictably swayed toward the newspaper’s views, and the more so, the more articles he reads. We refer to the failure to adjust properly for information repetitions as persuasion bias, to highlight that this bias is related to persuasive activity.

More generally, the failure to adjust for repetitions can apply not only to information coming from one source over time, but also to information coming from multiple sources connected through a social network. Suppose, for example, that two individuals speak to one another about an issue after having both spoken to a common third party on the issue. Then, if the two conferring individuals do not account for the fact that their counterpart’s opinion is based on some of the same (third party) information as their own opinion, they will double-count the third party’s opinion.

…

Persuasion bias yields a direct explanation for a number of important phenomena. Consider, for example, the issue of airtime in political campaigns and court trials. A political debate without equal time for both sides, or a criminal trial in which the defense was given less time to present its case than the prosecution, would generally be considered biased and unfair. This seems at odds with a rational model. Indeed, listening to a political candidate should, in expectation, have no effect on a rational individual’s opinion, and thus, the candidate’s airtime should not matter. By contrast, under persuasion bias, the repetition of arguments made possible by more airtime can have an effect. Other phenomena that can be readily understood with persuasion bias are marketing, propaganda, and censorship. In all these cases, there seems to be a common notion that repeated exposures to an idea have a greater effect on the listener than a single exposure. More generally, persuasion bias can explain why individuals’ beliefs often seem to evolve in a predictable manner toward the standard, and publicly known, views of groups with which they interact (be they professional, social, political, or geographical groups)—a phenomenon considered indisputable and foundational by most sociologists[emphasis added]
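The mechanism can be illustrated with a toy calculation. This is only a sketch under simplifying assumptions, not the model in the paper: beliefs are normally distributed, the prior and the message have equal (hypothetical) precision, and the biased listener treats every repetition of the same message as a fresh, independent observation, while the careful listener counts it once.

```python
def posterior_mean(prior_mean, prior_precision, signal, signal_precision, times_counted):
    """Normal-normal update in which one signal is counted `times_counted` times."""
    total_precision = prior_precision + times_counted * signal_precision
    weighted_sum = prior_precision * prior_mean + times_counted * signal_precision * signal
    return weighted_sum / total_precision


# Hypothetical values: the listener starts at 0.0 and the message argues for 1.0.
PRIOR, SIGNAL = 0.0, 1.0
repetitions = [1, 2, 5, 20]

# A careful listener counts the repeated message once, however often it is heard.
careful = posterior_mean(PRIOR, 1.0, SIGNAL, 1.0, times_counted=1)

# A listener with persuasion bias counts every repetition as new information.
biased = [posterior_mean(PRIOR, 1.0, SIGNAL, 1.0, times_counted=n) for n in repetitions]

for n, belief in zip(repetitions, biased):
    print(n, round(belief, 3))
```

The careful listener stays at 0.5 no matter how often the message is repeated; the biased listener drifts towards 1.0 as the repetitions pile up, which is the sense in which more airtime buys more persuasion.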

While this is great for the Democrats in getting Obama to the White House, the charge that Obama won with money and not on his ideas will sting for any Democrat voter who believes they decided on the issues. Worse, though, is that by having the crutch of blaming the Obama campaign’s fundraising for their loss, the Republican party may not seriously think through why they lost on any deeper level. We need the Republicans to get out of the small-minded, socially conservative rut they’ve occupied for the last 12+ years.

There is no doubt in my mind that Professor Krugman deserves this, but who doesn’t think that this is just a little bit of an “I told you so” from Sweden to the USA?

Update: Alex Tabarrok gives a simple summary of New Trade Theory. Do read Tyler Cowen for a summary of Paul Krugman’s work, his more esoteric writing and some analysis of the award itself.

I have to say I did not expect him to win until Bush left office, as I thought the Swedes wanted the resulting discussion to focus on Paul’s academic work rather than on issues of politics. So I am surprised by the timing but not by the choice.

…

This was definitely a “real world” pick and a nod in the direction of economists who are engaged in policy analysis and writing for the broader public. Krugman is a solo winner and solo winners are becoming increasingly rare. That is the real statement here, namely that Krugman deserves his own prize, all to himself. This could easily have been a joint prize, given to other trade figures as well, but in handing it out solo I believe the committee is a) stressing Krugman’s work in economic geography, and b) stressing the importance of relevance for economics

Well, the nationalisation of Fannie and Freddie is obviously the news, but as a backdrop (and what I really wanted to point out), I found this amazing:

Two out of every 31 mortgages in the USA are overdue on repayments.

Robert Gibbons [MIT] wrote, in a 2004 essay:

When I first read Coase’s (1984: 230) description of the collected works of the old-school institutionalists – as “a mass of descriptive material waiting for a theory, or a fire” – I thought it was (a) hysterically funny and (b) surely dead-on (even though I had not read this work). Sometime later, I encountered Krugman’s (1995: 27) assertion that “Like it or not, … the influence of ideas that have not been embalmed in models soon decays.” I think my reaction to Krugman was almost as enthusiastic as my reaction to Coase, although I hope the word “embalmed” gave me at least some pause. But then I made it to Krugman’s contention that a prominent model in economic geography “was the one piece of a heterodox framework that could easily be handled with orthodox methods, and so it attracted research out of all proportion to its considerable merits” (p. 54). At this point, I stopped reading and started trying to think.

This is really important, fundamental stuff. I’ve been interested in it for a while (e.g. my previous thoughts on “mainstream” economics and the use of mathematics in economics). Beyond the movement of economics as a discipline towards formal (i.e. mathematical) models as a methodology, there is even a movement to certain types or styles of model. See, for example, the summary – and the warnings given – by Olivier Blanchard [MIT] regarding methodology in his recent paper “The State of Macro”:

That there has been convergence in vision may be controversial. That there has been convergence in methodology is not: Macroeconomic articles, whether they be about theory or facts, look very similar to each other in structure, and very different from the way they did thirty years ago.

…

[M]uch of the work in macro in the 1960s and 1970s consisted of ignoring uncertainty, reducing problems to 2×2 differential systems, and then drawing an elegant phase diagram. There was no appealing alternative – as anybody who has spent time using Cramer’s rule on 3×3 systems knows too well. Macro was largely an art, and only a few artists did it well. Today, that technological constraint is simply gone. With the development of stochastic dynamic programming methods, and the advent of software such as Dynare – a set of programs which allows one to solve and estimate non-linear models under rational expectations – one can specify large dynamic models and solve them nearly at the touch of a button.

…

Today, macro-econometrics is mainly concerned with system estimation … Systems, characterized by a set of structural parameters, are typically estimated as a whole … Because of the difficulty of finding good instruments when estimating macro relations, equation-by-equation estimation has taken a back seat – probably too much of a back seat

…

DSGE models have become ubiquitous. Dozens of teams of researchers are involved in their construction. Nearly every central bank has one, or wants to have one. They are used to evaluate policy rules, to do conditional forecasting, or even sometimes to do actual forecasting. There is little question that they represent an impressive achievement. But they also have obvious flaws. This may be a case in which technology has run ahead of our ability to use it, or at least to use it best:

- The mapping of structural parameters to the coefficients of the reduced form of the model is highly non linear. Near non-identification is frequent, with different sets of parameters yielding nearly the same value for the likelihood function – which is why pure maximum likelihood is nearly never used … The use of additional information, as embodied in Bayesian priors, is clearly conceptually the right approach. But, in practice, the approach has become rather formulaic and hypocritical.

- Current theory can only deliver so much. One of the principles underlying DSGEs is that, in contrast to the previous generation of models, all dynamics must be derived from first principles. The main motivation is that only under these conditions, can welfare analysis be performed. A general characteristic of the data, however, is that the adjustment of quantities to shocks appears slower than implied by our standard benchmark models. Reconciling the theory with the data has led to a lot of unconvincing reverse engineering

…

This way of proceeding is clearly wrong-headed: First, such additional assumptions should be introduced in a model only if they have independent empirical support … Second, it is clear that heterogeneity and aggregation can lead to aggregate dynamics which have little apparent relation to individual dynamics.

There are, of course and as always, more heterodox criticisms of the current synthesis of macroeconomic methodology. See, for example, the book “Post Walrasian Macroeconomics: Beyond the Dynamic Stochastic General Equilibrium Model” edited by David Colander.

I’m not sure where all of that leaves us, but it makes you think …

(Hat tip: Tyler Cowen)